William Thong

Profile

At Sony AI, William is a research scientist in the AI Ethics research flagship. Prior to joining Sony AI, he was a Ph.D. researcher in computer vision at the University of Amsterdam, under the supervision of Cees Snoek (VIS lab). William’s Ph.D. research involved visual search, learning with limited labels, and model biases. Previously, he completed an M.Sc. in biomedical engineering at Polytechnique Montréal, under the supervision of Samuel Kadoury (MedICAL lab) and Chris Pal (Mila). During his graduate studies, William was supported by several scholarships from the Natural Sciences and Engineering Research Council of Canada.

Message

“My work at Sony AI currently focuses on algorithmic fairness to enable a better assessment of potential biases and their mitigation in computer vision problems.”

Publications

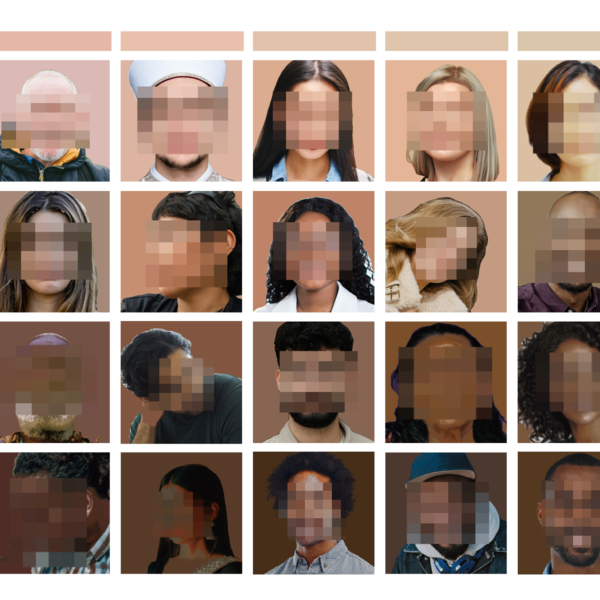

Human-centric computer vision (HCCV) data curation practices often neglect privacy and bias concerns, leading to dataset retractions and unfair models. HCCV datasets constructed through nonconsensual web scraping lack crucial metadata for comprehensive fairness and robustnes…

This paper considers retrieval of videos containing human activity from just a video query. In the literature, a common assumption is that all activities have sufficient labelled examples when learning an embedding for retrieval. However, this assumption does not hold in pra…

This paper strives to measure apparent skin color in computer vision, beyond a unidimensional scale on skin tone. In their seminal paper Gender Shades, Buolamwini and Gebru have shown how gender classification systems can be biased against women with darker skin tones. While…

Human-centric image datasets are critical to the development of computer vision technologies. However, recent investigations have foregrounded significant ethical issues related to privacy and bias, which have resulted in the complete retraction, or modification, of several …

Image quality assessment (IQA) forms a natural and often straightforward undertaking for humans, yet effective automation of the task remains highly challenging. Recent metrics from the deep learning community commonly compare image pairs during training to improve upon trad…

This paper strives to address image classifier bias, with a focus on both feature and label embedding spaces. Previous works have shown that spurious correlations from protected attributes, such as age, gender, or skin tone, can cause adverse decisions. To balance potential …

Blog

January 18, 2024 | Events

Navigating Responsible Data Curation Takes the Spotlight at NeurIPS 2023

The field of Human-Centric Computer Vision (HCCV) is rapidly progressing, and some researchers are raising a red flag on the current ethics of data curation. A primary concern is that today’s practices in HCCV data curation – whic…

December 13, 2023 | Events

Sony AI Reveals New Research Contributions at NeurIPS 2023

Sony Group Corporation and Sony AI have been active participants in the annual NeurIPS Conference for years, contributing pivotal research that has helped to propel the fields of artificial intelligence and machine learning forwar…

September 21, 2023 | AI Ethics

Beyond Skin Tone: A Multidimensional Measure of Apparent Skin Color

Advancing Fairness in Computer Vision: A Multi-Dimensional Approach to Skin Color Analysis

In the ever-evolving landscape of artificial intelligence (AI) and computer vision, fairness is a principle that has gained substantial …